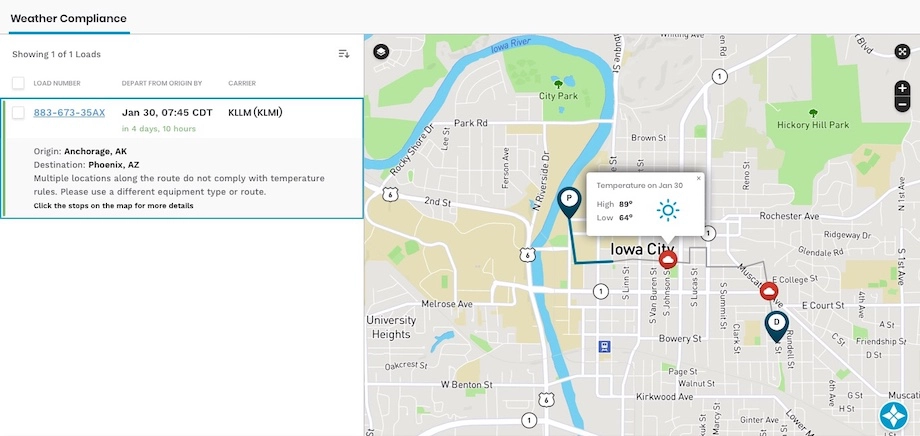

Temperature Compliance

Customers whose goods are highly sensitive to temperature along the route need to be notified in advance of any reefer requirement, and alerted to unacceptable temperature variations during transit, to protect the goods and save energy and cost.

Worked On

Backend data pipelines, Spark batch and streaming jobs

Tech Stack

Spark, Kafka, Hadoop, DynamoDB, Elasticsearch, Redshift, Airflow, Python, Ruby

Date

August 2019

Challenge

Alerting users in advance to the temperatures along the route and suggesting whether a reefer container is required for the transit.

Solution

A combination of streaming and batch pipelines that proactively track temperature variations across different areas and raise an alert if a load enters an area with adverse conditions. The pipelines also predict whether an expensive reefer container is required for transport, based on historical data and on weather and temperature conditions along the route.
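The core check can be illustrated with a minimal sketch. It assumes a simple threshold rule over per-area forecasts; the names `AreaForecast`, `TemperatureImpact`, `assess_route` and the safe-range parameters are hypothetical, and the actual prediction also drew on historical data that this sketch does not model.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AreaForecast:
    """Forecast temperature range for one area on the route (hypothetical shape)."""
    area: str
    min_temp_c: float
    max_temp_c: float


@dataclass
class TemperatureImpact:
    """Result of checking a route against a load's acceptable temperature range."""
    violating_areas: List[str]
    reefer_recommended: bool


def assess_route(forecasts: List[AreaForecast],
                 safe_min_c: float,
                 safe_max_c: float) -> TemperatureImpact:
    """Flag every area whose forecast leaves the safe range.

    Assumption: a reefer is recommended as soon as any area violates the range.
    """
    violating = [
        f.area
        for f in forecasts
        if f.min_temp_c < safe_min_c or f.max_temp_c > safe_max_c
    ]
    return TemperatureImpact(violating_areas=violating,
                             reefer_recommended=bool(violating))
```

The areas in `violating_areas` are the ones that would trigger an alert when a load is about to enter them.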

About Temperature Compliance

Shippers with goods adversely affected by temperature along the route, especially food items, need to plan beforehand.

- Companies often struggle to manage their refrigerated loads efficiently because they do not know when refrigeration is needed and when it is not.

- Sustainability: optimizing reefer loads not only cuts costs significantly but also saves energy overall.

- Some companies do not want their loads to stop in areas with adverse temperature conditions.

Solution

I designed the architecture and implemented the end-to-end data pipeline and the backend microservice for API consumption. Using Spark, I built a batch job (Python) that periodically fetches data from the warehouse for temperature impact computation. Ad-hoc triggers from the application layer are supported by a Ruby background worker, which pushes the loads to Kafka.

A Spark streaming job (Python) consumes the loads from Kafka, fetches the predicted route from a NoSQL store, gets the temperature forecast for the areas along the route from the HERE APIs, computes the temperature impact, and stores the result in the NoSQL store and Elasticsearch. Requests from the application are served by a microservice written in Ruby, which reads the results and predictions from the NoSQL store and Elasticsearch.
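The per-load step of the streaming job could look roughly like the sketch below. It is plain Python rather than Spark code, and the route lookup, forecast lookup, adverse-condition rule and sinks are passed in as callables; `process_load`, `fetch_route`, `fetch_forecast`, `is_adverse` and the record fields are all hypothetical stand-ins for the NoSQL store, the HERE API client and the Elasticsearch writer.

```python
from typing import Callable, Dict, Iterable, List


def process_load(load: Dict,
                 fetch_route: Callable[[str], List[str]],
                 fetch_forecast: Callable[[str], float],
                 is_adverse: Callable[[float], bool],
                 sinks: Iterable[Callable[[Dict], None]]) -> Dict:
    """Compute the temperature impact for one load and write it to every sink.

    Mirrors the described flow: route lookup, forecast lookup per area,
    impact computation, then persistence to each result store.
    """
    load_id = load["load_id"]
    areas = fetch_route(load_id)  # predicted route, as a list of area names

    # Keep the areas whose forecast temperature is considered adverse.
    flagged = [area for area in areas if is_adverse(fetch_forecast(area))]

    result = {
        "load_id": load_id,
        "flagged_areas": flagged,
        "alert": bool(flagged),
    }
    for write in sinks:  # e.g. a NoSQL writer and a search-index writer
        write(result)
    return result


# Usage with in-memory stand-ins for the external systems.
routes = {"L1": ["north", "desert"]}
forecasts_c = {"north": 10.0, "desert": 38.0}
nosql_rows: List[Dict] = []
search_docs: List[Dict] = []

result = process_load(
    {"load_id": "L1"},
    fetch_route=routes.__getitem__,
    fetch_forecast=forecasts_c.__getitem__,
    is_adverse=lambda temp_c: temp_c < 2.0 or temp_c > 25.0,
    sinks=[nosql_rows.append, search_docs.append],
)
```

Injecting the lookups and sinks keeps the computation testable without Kafka or a live forecast service; in a streaming job the same function would be applied to each consumed record.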